Pandas

{Back to Index}

Table of Contents

- 1. 核心数据结构创建与基本操作

- 2. 索引

- 3. 数据清洗

- 4. 数据规整:连接,联合,重塑

- 5. 聚合与分组操作

- 6. 时间序列

- 7. 移动窗口函数

- 8. 实用方法

1 核心数据结构创建与基本操作

1.1 索引对象

Pandas 中的索引对象适用于存储标签和其他元数据的。

索引对象是不可变的。

s = pd.Series(range(3), index=list('abc'))

index = s.index

log("index", index)

log("index[1:]", index[1:])

try:

index[1] = 'd' # TypeError

except Exception as e:

log("TypeError", e)

==================================== index ===================================== Index(['a', 'b', 'c'], dtype='object') ================================== index[1:] =================================== Index(['b', 'c'], dtype='object') ================================== TypeError =================================== Index does not support mutable operations

1.2 Panel

Panel 是三维带标签的数组。

Panel 由三个标签组成:

- items

- 坐标轴 0 ,索引对应的元素是一个 DataFrame

- major_axis

- 坐标轴 1 , DataFrame 里的行标签

- minor_axis

- 坐标轴 2 , DataFrame 里的列标签

1.3 Series

Series 是一维带标签的数组,数组里可以放任意的数据(整数,浮点数,字符串,Python Object)。

其基本的创建函数是: pd.Series(data, index=index)

其中 index 是一个列表,用来作为数据的标签。data 可以是不同的数据类型:

- Python 字典

- ndarray 对象

- 一个标量值,如 5

1.3.1 创建

1.3.1.1 从 ndarray 创建

s = pd.Series(np.random.randn(5), index=['a', 'b', 'c', 'd', 'e'])

log("s", s)

log("s.index", s.index)

s2 = pd.Series(np.random.randn(5))

log("s2", s2)

log("s2.index", s2.index)

====================================== s ======================================= a 1.048359 b -0.206651 c 0.875843 d 0.417848 e 1.849956 dtype: float64 =================================== s.index ==================================== Index(['a', 'b', 'c', 'd', 'e'], dtype='object') ====================================== s2 ====================================== 0 -0.298220 1 -0.550852 2 -0.558227 3 -1.762704 4 -1.475406 dtype: float64 =================================== s2.index =================================== RangeIndex(start=0, stop=5, step=1)

1.3.1.2 从字典创建

d = {'a' : 0., 'b' : 1., 'd' : 3}

s = pd.Series(d, index=list('abcd'))

log("s", s)

====================================== s ======================================= a 0.0 b 1.0 c NaN d 3.0 dtype: float64

1.3.1.3 从标量创建

s = pd.Series(3, index=list('abcde'))

log("s", s)

====================================== s ======================================= a 3 b 3 c 3 d 3 e 3 dtype: int64

1.3.2 基本操作

1.3.2.1 赋值

s = pd.Series([4, 7, -5, 3], index=['d', 'b', 'a', 'c'])

log("s", s)

s['b':'a'] = 5

log("s", s)

====================================== s ======================================= d 4 b 7 a -5 c 3 dtype: int64 ====================================== s ======================================= d 4 b 5 a 5 c 3 dtype: int64

1.3.2.2 与标量相乘

s = pd.Series([4, 7, -5, 3], index=['d', 'b', 'a', 'c'])

log("s * 2", s * 2)

==================================== s * 2 ===================================== d 8 b 14 a -10 c 6 dtype: int64

1.3.2.3 应用数学函数

s = pd.Series([4, 7, -5, 3], index=['d', 'b', 'a', 'c'])

log("np.exp(s)", np.exp(s))

================================== np.exp(s) =================================== d 54.598150 b 1096.633158 a 0.006738 c 20.085537 dtype: float64

1.3.2.4 映射 (apply)

s.apply(value_to_value_func) -> Series

1.3.2.5 排序(sort_index/sort_values)

s = pd.Series(range(4), index=list('dabc'))

log("s.sort_index()", s.sort_index())

log("s.sort_values()", s.sort_values())

================================ s.sort_index() ================================ a 1 b 2 c 3 d 0 dtype: int64 =============================== s.sort_values() ================================ d 0 a 1 b 2 c 3 dtype: int64

1.3.2.6 唯一值,计数和成员(unique/count/isin)

s = pd.Series(list('cadaabbcc'))

log("s.unique()", s.unique())

log("s.value_counts()", s.value_counts())

log("s.isin(['b', 'c'])", s.isin(['b', 'c']))

1.3.2.7 排名(rank)

s = pd.Series([7, -5, 7, 4, 2, 0, 4])

log("s.rank()", s.rank())

log("s.rank(method='first')", s.rank(method='first'))

log("s.rank(ascending=False, method='max')", s.rank(ascending=False, method='max'))

=================================== s.rank() =================================== 0 6.5 1 1.0 2 6.5 3 4.5 4 3.0 5 2.0 6 4.5 dtype: float64 ============================ s.rank(method='first') ============================ 0 6.0 1 1.0 2 7.0 3 4.0 4 3.0 5 2.0 6 5.0 dtype: float64 ==================== s.rank(ascending=False, method='max') ===================== 0 2.0 1 7.0 2 2.0 3 4.0 4 5.0 5 6.0 6 4.0 dtype: float64

1.3.2.8 在字典上下文中使用

s = pd.Series([4, 7, -5, 3], index=['d', 'b', 'a', 'c'])

log("'b' in s", 'b' in s)

log("'e' in s", 'e' in s)

log("7 in s", 7 in s)

: =================================== 'b' in s =================================== : True : =================================== 'e' in s =================================== : False : ==================================== 7 in s ==================================== : False

1.3.2.9 检查缺失数据

data = {'Ohio': 35000, 'Texas': 71000, 'Oregon': 16000, 'Utah': 5000}

states = ['California', 'Ohio', 'Oregon', 'Texas']

s = pd.Series(data, index=states)

log("s", s)

log("s.isnull()", s.isnull())

log("s.notnull()", s.notnull())

====================================== s ======================================= California NaN Ohio 35000.0 Oregon 16000.0 Texas 71000.0 dtype: float64 ================================== s.isnull() ================================== California True Ohio False Oregon False Texas False dtype: bool ================================= s.notnull() ================================== California False Ohio True Oregon True Texas True dtype: bool

1.4 DataFrame

DataFrame 是 二维带行索引和列索引的矩阵 。

可以把 DataFrame 想象成一个包含 已排序的列 的集合。

创建 DataFrame 的基本格式是:

pd.DataFrame(data, index=index, columns=columns)

其中 index 是行标签,=columns= 是列标签,=data= 可以是下面的数据:

- 由一维 numpy 数组,list,Series 构成的字典

- 二维 numpy 数组

- 一个 Series

- 另外的 DataFrame 对象

1.4.1 创建

1.4.1.1 从字典创建

key 为 DataFrame 的列; value 为对应列下的值

d = {'one' : pd.Series([1, 2, 3], index=['a', 'b', 'c']),

'two' : pd.Series([1, 2, 3, 4], index=['a', 'b', 'c', 'd'])}

log("pd.DataFrame(d)", pd.DataFrame(d))

log("pd.DataFrame(d, index=['d', 'b', 'a'])", pd.DataFrame(d, index=['d', 'b', 'a']))

log("pd.DataFrame(d, index=['d', 'b', 'a'], columns=['two', 'three'])",

pd.DataFrame(d, index=['d', 'b', 'a'], columns=['two', 'three']))

=============================== pd.DataFrame(d) ================================ one two a 1.0 1 b 2.0 2 c 3.0 3 d NaN 4 ==================== pd.DataFrame(d, index=['d', 'b', 'a']) ==================== one two d NaN 4 b 2.0 2 a 1.0 1 ======= pd.DataFrame(d, index=['d', 'b', 'a'], columns=['two', 'three']) ======= two three d 4 NaN b 2 NaN a 1 NaN

d = {'one' : [1, 2, 3, 4],

'two' : [21, 22, 23, 24]}

log("pd.DataFrame(d)", pd.DataFrame(d))

log("pd.DataFrame(d, index=['a', 'b', 'c', 'd'])", pd.DataFrame(d, index=['a', 'b', 'c', 'd']))

=============================== pd.DataFrame(d) ================================ one two 0 1 21 1 2 22 2 3 23 3 4 24 ================= pd.DataFrame(d, index=['a', 'b', 'c', 'd']) ================== one two a 1 21 b 2 22 c 3 23 d 4 24

df = pd.DataFrame({

'A': 1,

'B': pd.Timestamp('20160301'),

'C': range(4),

'D': np.arange(5, 9),

'E': 'text',

'F': ['AA', 'BB', 'CC', 'DD']})

log("df", df)

====================================== df ====================================== A B C D E F 0 1 2016-03-01 0 5 text AA 1 1 2016-03-01 1 6 text BB 2 1 2016-03-01 2 7 text CC 3 1 2016-03-01 3 8 text DD

1.4.1.2 从结构化数据列表创建

data = [(1, 2.2, 'Hello'), (2, 3., "World")]

log("pd.DataFrame(data)", pd.DataFrame(data))

log("pd.DataFrame(data, index=['first', 'second'], columns=['A', 'B', 'C'])",

pd.DataFrame(data, index=['first', 'second'], columns=['A', 'B', 'C']))

============================== pd.DataFrame(data) ==============================

0 1 2

0 1 2.2 Hello

1 2 3.0 World

==== pd.DataFrame(data, index=['first', 'second'], columns=['A', 'B', 'C']) ====

A B C

first 1 2.2 Hello

second 2 3.0 World

1.4.1.3 从字典列表创建

data = [{'a': 1, 'b': 2}, {'a': 5, 'b': 10, 'c': 20}]

log("pd.DataFrame(data)", pd.DataFrame(data))

log("pd.DataFrame(data, index=['first', 'second'])",

pd.DataFrame(data, index=['first', 'second']))

log("pd.DataFrame(data, columns=['a', 'b'])",

pd.DataFrame(data, columns=['a', 'b']))

============================== pd.DataFrame(data) ==============================

a b c

0 1 2 NaN

1 5 10 20.0

================ pd.DataFrame(data, index=['first', 'second']) =================

a b c

first 1 2 NaN

second 5 10 20.0

==================== pd.DataFrame(data, columns=['a', 'b']) ====================

a b

0 1 2

1 5 10

1.4.1.4 从元组字典创建

实际应用中,会通过数据清洗的方式,把数据整理成方便 Pandas 导入且可读性好的格式。 然后再通过 reindex/groupby 等方式转换成复杂数据结构。

d = {('a', 'b'): {('A', 'B'): 1, ('A', 'C'): 2},

('a', 'a'): {('A', 'C'): 3, ('A', 'B'): 4},

('a', 'c'): {('A', 'B'): 5, ('A', 'C'): 6},

('b', 'a'): {('A', 'C'): 7, ('A', 'B'): 8},

('b', 'b'): {('A', 'D'): 9, ('A', 'B'): 10}}

# 多级标签

log("pd.DataFrame(d)", pd.DataFrame(d))

=============================== pd.DataFrame(d) ================================

a b

b a c a b

A B 1.0 4.0 5.0 8.0 10.0

C 2.0 3.0 6.0 7.0 NaN

D NaN NaN NaN NaN 9.0

1.4.1.5 从 Series 创建

s = pd.Series(np.random.randn(5), index=['a', 'b', 'c', 'd', 'e'])

log("pd.DataFrame(s)", pd.DataFrame(s))

log("pd.DataFrame(s, index=['a', 'c', 'd'])",

pd.DataFrame(s, index=['a', 'c', 'd']))

log("pd.DataFrame(s, index=['a', 'c', 'd'], columns=['A'])",

pd.DataFrame(s, index=['a', 'c', 'd'], columns=['A']))

=============================== pd.DataFrame(s) ================================

0

a 1.052429

b -0.183841

c -0.567050

d -0.946448

e -0.245539

==================== pd.DataFrame(s, index=['a', 'c', 'd']) ====================

0

a 1.052429

c -0.567050

d -0.946448

============ pd.DataFrame(s, index=['a', 'c', 'd'], columns=['A']) =============

A

a 1.052429

c -0.567050

d -0.946448

1.4.1.6 指定行列索引创建

dates = pd.date_range('20160301', periods=6)

log("dates", dates)

df = pd.DataFrame(np.random.randn(6,4), index=dates, columns=list('ABCD'))

log("df", df)

==================================== dates =====================================

DatetimeIndex(['2016-03-01', '2016-03-02', '2016-03-03', '2016-03-04',

'2016-03-05', '2016-03-06'],

dtype='datetime64[ns]', freq='D')

====================================== df ======================================

A B C D

2016-03-01 -0.048170 1.536653 0.286190 -0.105748

2016-03-02 0.212721 -0.019064 -0.235831 -0.123454

2016-03-03 -0.465780 0.908629 0.148821 0.736669

2016-03-04 1.020257 1.094551 0.508659 0.018455

2016-03-05 -0.065958 0.437436 -1.026936 0.028647

2016-03-06 -0.055553 0.853023 -0.155418 0.160215

1.4.2 基本操作

1.4.2.1 转置

data = {'Nevada': {2001: 2.4, 2002: 2.9},

'Ohio': {2001: 1.5, 2001: 1.7, 2002: 3.6}}

df = pd.DataFrame(data)

log("df", df)

log("df.T", df.T)

====================================== df ======================================

Nevada Ohio

2001 2.4 1.7

2002 2.9 3.6

===================================== df.T =====================================

2001 2002

Nevada 2.4 2.9

Ohio 1.7 3.6

1.4.2.2 算数运算填充

df1 = pd.DataFrame(np.arange(12.).reshape((3, 4)),

columns=list('abcd'))

df2 = pd.DataFrame(np.arange(20.).reshape((4, 5)),

columns=list('abcde'))

log("df1", df1)

log("df2", df2)

log("df1 + df2", df1 + df2)

log("df1.add(df2, fill_value=0)", df1.add(df2, fill_value=0))

===================================== df1 ======================================

a b c d

0 0.0 1.0 2.0 3.0

1 4.0 5.0 6.0 7.0

2 8.0 9.0 10.0 11.0

===================================== df2 ======================================

a b c d e

0 0.0 1.0 2.0 3.0 4.0

1 5.0 6.0 7.0 8.0 9.0

2 10.0 11.0 12.0 13.0 14.0

3 15.0 16.0 17.0 18.0 19.0

================================== df1 + df2 ===================================

a b c d e

0 0.0 2.0 4.0 6.0 NaN

1 9.0 11.0 13.0 15.0 NaN

2 18.0 20.0 22.0 24.0 NaN

3 NaN NaN NaN NaN NaN

========================== df1.add(df2, fill_value=0) ==========================

a b c d e

0 0.0 2.0 4.0 6.0 4.0

1 9.0 11.0 13.0 15.0 9.0

2 18.0 20.0 22.0 24.0 14.0

3 15.0 16.0 17.0 18.0 19.0

1.4.2.3 和 Series 之间的运算(广播机制)

默认会将 Series 的索引与 DataFrame 的列进行匹配,并广播到各行:

df = pd.DataFrame(np.arange(12.).reshape((4, 3)),

columns=list('bde'),

index=['Utah', 'Ohio', 'Texas', 'Oregon'])

log("df", df)

s = df.iloc[0]

log("s", s)

log("df - s", df - s)

s2 = pd.Series(range(3), index=['b', 'e', 'f'])

log("df + s2", df + s2)

====================================== df ======================================

b d e

Utah 0.0 1.0 2.0

Ohio 3.0 4.0 5.0

Texas 6.0 7.0 8.0

Oregon 9.0 10.0 11.0

====================================== s =======================================

b 0.0

d 1.0

e 2.0

Name: Utah, dtype: float64

==================================== df - s ====================================

b d e

Utah 0.0 0.0 0.0

Ohio 3.0 3.0 3.0

Texas 6.0 6.0 6.0

Oregon 9.0 9.0 9.0

=================================== df + s2 ====================================

b d e f

Utah 0.0 NaN 3.0 NaN

Ohio 3.0 NaN 6.0 NaN

Texas 6.0 NaN 9.0 NaN

Oregon 9.0 NaN 12.0 NaN

如果想在列上广播,在行上匹配,必须使用 算数方法 中的一种:

s3 = df['d']

log("df.sub(s3, axis='index')", df.sub(s3, axis='index'))

=========================== df.sub(s3, axis='index') ===========================

b d e

Utah -1.0 0.0 1.0

Ohio -1.0 0.0 1.0

Texas -1.0 0.0 1.0

Oregon -1.0 0.0 1.0

1.4.2.4 应用函数

从本质上讲,DataFrame 内部用的数据结构就是 numpy 的 ndarray 。

df = pd.DataFrame(np.random.randn(10, 4), columns=['one', 'two', 'three', 'four'])

log("df", df)

log("np.exp(df)", np.exp(df))

log("np.sin(df)", np.sin(df))

====================================== df ======================================

one two three four

0 0.384419 -0.261265 -0.776319 -1.083965

1 -0.280943 1.203641 0.392169 -2.484636

2 1.101764 0.041095 1.075932 0.543424

3 0.472131 0.070010 -0.199482 -0.140922

4 -0.479721 0.281841 1.163404 0.694684

5 -0.464032 -1.058426 -0.692909 0.244612

6 1.082909 1.101045 -1.151583 -1.061644

7 0.533688 -1.130648 -0.350829 1.444129

8 -0.019973 1.649555 1.037025 0.059973

9 -0.337814 -2.070719 1.277318 -0.913099

================================== np.exp(df) ==================================

one two three four

0 1.468760 0.770077 0.460097 0.338252

1 0.755072 3.332227 1.480187 0.083356

2 3.009471 1.041951 2.932725 1.721893

3 1.603407 1.072519 0.819155 0.868557

4 0.618956 1.325568 3.200812 2.003075

5 0.628743 0.347002 0.500119 1.277125

6 2.953258 3.007307 0.316136 0.345887

7 1.705209 0.322824 0.704104 4.238160

8 0.980226 5.204665 2.820814 1.061808

9 0.713328 0.126095 3.587005 0.401279

================================== np.sin(df) ==================================

one two three four

0 0.375020 -0.258303 -0.700658 -0.883820

1 -0.277262 0.933352 0.382193 -0.610710

2 0.892006 0.041083 0.880033 0.517070

3 0.454785 0.069953 -0.198162 -0.140456

4 -0.461532 0.278124 0.918157 0.640142

5 -0.447558 -0.871585 -0.638778 0.242180

6 0.883325 0.891681 -0.913410 -0.873158

7 0.508712 -0.904688 -0.343677 0.991988

8 -0.019971 0.996900 0.860895 0.059937

9 -0.331425 -0.877620 0.957243 -0.791402

1.4.2.5 行/列映射 (apply)

df.apply(series_to_series_func) -> DataFrame

df.apply(series_to_value_func) -> Series

df = pd.DataFrame(np.arange(12).reshape(4, 3),

index=['one', 'two', 'three', 'four'],

columns=list('ABC'))

log("df", df)

log("df.apply(lambda x: x.max() - x.min())", df.apply(lambda x: x.max() - x.min()))

log("df.apply(lambda x: x.max() - x.min(), axis=1)", df.apply(lambda x: x.max() - x.min(), axis=1))

def min_max(x):

return pd.Series([x.min(), x.max()], index=['min', 'max'])

log("df.apply(min_max, axis=1)", df.apply(min_max, axis=1))

====================================== df ======================================

A B C

one 0 1 2

two 3 4 5

three 6 7 8

four 9 10 11

==================== df.apply(lambda x: x.max() - x.min()) =====================

A 9

B 9

C 9

dtype: int64

================ df.apply(lambda x: x.max() - x.min(), axis=1) =================

one 2

two 2

three 2

four 2

dtype: int64

========================== df.apply(min_max, axis=1) ===========================

min max

one 0 2

two 3 5

three 6 8

four 9 11

1.4.2.6 逐元素映射 (applymap)

df.applymap(value_to_value_func) -> DataFrame

df = pd.DataFrame(np.random.randn(4, 3),

index=['one', 'two', 'three', 'four'],

columns=list('ABC'))

log("df", df)

log("df.applymap(lambda x: '{0:.03f}'.format(x))", df.applymap(lambda x: '{0:.03f}'.format(x)))

1.4.2.7 排序(sort_index/sort_values)

df = pd.DataFrame(np.arange(8).reshape((2, 4)),

index=['three', 'one'],

columns=list('dabc'))

log("df", df)

log("df.sort_index()", df.sort_index())

log("df.sort_index(axis=1)", df.sort_index(axis=1))

log("df.sort_values(by='a')", df.sort_values(by='a'))

log("df.sort_values(by=['a', 'b'])", df.sort_values(by=['a', 'b']))

====================================== df ======================================

d a b c

three 0 1 2 3

one 4 5 6 7

=============================== df.sort_index() ================================

d a b c

one 4 5 6 7

three 0 1 2 3

============================ df.sort_index(axis=1) =============================

a b c d

three 1 2 3 0

one 5 6 7 4

============================ df.sort_values(by='a') ============================

d a b c

three 0 1 2 3

one 4 5 6 7

======================== df.sort_values(by=['a', 'b']) =========================

d a b c

three 0 1 2 3

one 4 5 6 7

1.4.2.8 排名(rank)

df = pd.DataFrame({'b': [4.3, 7, -3, 2], 'a': [0, 1, 0, 1], 'c': [-2, 5, 8, -2.5]})

log("df", df)

log("df.rank(axis='columns')", df.rank(axis='columns'))

====================================== df ======================================

b a c

0 4.3 0 -2.0

1 7.0 1 5.0

2 -3.0 0 8.0

3 2.0 1 -2.5

=========================== df.rank(axis='columns') ============================

b a c

0 3.0 2.0 1.0

1 3.0 1.0 2.0

2 1.0 2.0 3.0

3 3.0 2.0 1.0

1.4.2.9 转换为 ndarray 对象

df = pd.DataFrame(np.random.randn(10, 4), columns=['one', 'two', 'three', 'four'])

ary = np.asarray(df)

log("ary", ary)

log("ary == df.values", ary == df.values)

log("ary == df", ary == df)

===================================== ary ======================================

[[ 2.04624678 -0.6312282 0.67979273 -0.44172316]

[-0.86094589 1.94346553 -2.14098712 -0.59540524]

[-0.15020567 0.89921316 1.57976154 1.28561354]

[ 0.36556931 0.74106876 -1.11107492 -0.0127461 ]

[ 1.0701633 -1.33064105 0.21082171 2.11969444]

[-1.40825621 0.77820317 0.28563787 -0.00318099]

[-0.41136998 0.73250492 1.42237664 -1.03227235]

[-0.05801189 0.2636244 -1.40155875 -1.28585849]

[-1.00331627 0.53425829 -0.37204681 0.34346003]

[-0.39496311 -1.61744328 -0.95510468 -0.5185989 ]]

=============================== ary == df.values ===============================

[[ True True True True]

[ True True True True]

[ True True True True]

[ True True True True]

[ True True True True]

[ True True True True]

[ True True True True]

[ True True True True]

[ True True True True]

[ True True True True]]

================================== ary == df ===================================

one two three four

0 True True True True

1 True True True True

2 True True True True

3 True True True True

4 True True True True

5 True True True True

6 True True True True

7 True True True True

8 True True True True

9 True True True True

1.4.2.10 统计

- count 非 NA 值个数

- describe 计算 Series 或 DataFrame 各列的汇总统计集合

- min, max

- argmin, argmax 最大最小值所在索引位置(整数)

- idxmin, idxmax 最大最小值所在索引标签

- quantile 计算样本从 0 到 1 间的分位数

- sum

- mean

- median

- mad 平均值的平均绝对偏差

- mod 频繁统计

- prod 所有值的积

- var 样本方差

- std 标准差

- skew 样本偏度(第三时刻)值

- kurt 样本峰度(第四时刻)值

- cumsum 累计值

- cummin, cummax

- cumprod

- diff 计算第一个算术差值(对时间序列有用)

- pct_change 计算百分比

2 索引

2.1 Series

2.1.1 设置对象自身和索引的 name 属性

data = {'Ohio': 35000, 'Texas': 71000, 'Oregon': 16000, 'Utah': 5000}

states = ['California', 'Ohio', 'Oregon', 'Texas']

s = pd.Series(data, index=states)

s.name = 'population'

s.index.name = 'state'

log("s", s)

====================================== s ======================================= state California NaN Ohio 35000.0 Oregon 16000.0 Texas 71000.0 Name: population, dtype: float64

2.1.2 获取值和索引

s = pd.Series(np.random.randn(5), index=['a', 'b', 'c', 'd', 'e'])

log("s", s)

log("s.values", s.values)

log("s.index", s.index)

====================================== s ======================================= a 0.007460 b -0.346364 c -1.524387 d -0.389066 e 0.464790 dtype: float64 =================================== s.values =================================== [ 0.00745967 -0.34636371 -1.52438655 -0.38906608 0.46479046] =================================== s.index ==================================== Index(['a', 'b', 'c', 'd', 'e'], dtype='object')

2.1.3 索引对齐

相同索引值才进行操作

s1 = pd.Series(np.random.randint(3, size=3), index=['a', 'c', 'e'])

s2 = pd.Series(np.random.randint(3, size=3), index=['a', 'd', 'e'])

log("s1", s1)

log("s2", s2)

log("s1 + s2", s1 + s2)

====================================== s1 ====================================== a 1 c 0 e 1 dtype: int64 ====================================== s2 ====================================== a 1 d 1 e 0 dtype: int64 =================================== s1 + s2 ==================================== a 2.0 c NaN d NaN e 1.0 dtype: float64

2.1.4 重建索引

s = pd.Series([4.5, 7.2, -5.3, 3.6], index=['d', 'b', 'a', 'c'])

log("s", s)

log("s.reindex(['a', 'b', 'c', 'd', 'e'])", s.reindex(['a', 'b', 'c', 'd', 'e']))

====================================== s ======================================= d 4.5 b 7.2 a -5.3 c 3.6 dtype: float64 ===================== s.reindex(['a', 'b', 'c', 'd', 'e']) ===================== a -5.3 b 7.2 c 3.6 d 4.5 e NaN dtype: float64

重建索引时插值:

s = pd.Series(['blue', 'purple', 'yellow'], index=[0, 2, 4])

log("s", s)

log("s.reindex(range(6), method='ffill')", s.reindex(range(6), method='ffill'))

====================================== s ======================================= 0 blue 2 purple 4 yellow dtype: object ===================== s.reindex(range(6), method='ffill') ====================== 0 blue 1 blue 2 purple 3 purple 4 yellow 5 yellow dtype: object

2.1.5 删除索引

s = pd.Series(np.arange(5.), index=list('abcde'))

log("s", s)

log("s.drop('c')", s.drop('c'))

log("s.drop(['d', 'c'])", s.drop(['d', 'c']))

====================================== s =======================================

a 0.0

b 1.0

c 2.0

d 3.0

e 4.0

dtype: float64

================================= s.drop('c') ==================================

a 0.0

b 1.0

d 3.0

e 4.0

dtype: float64

============================== s.drop(['d', 'c']) ==============================

a 0.0

b 1.0

e 4.0

dtype: float64

2.1.6 标签索引

s = pd.Series([4, 7, -5, 3], index=['a', 'b', 'c', 'd'])

log("s", s)

log("s['a']", s['a'])

s['d'] = 6

log("s[['c', 'a', 'd']]", s[['c', 'a', 'd']])

log("s['b' : 'c']", s['b' : 'c'])

====================================== s ======================================= a 4 b 7 c -5 d 3 dtype: int64 ==================================== s['a'] ==================================== 4 ============================== s[['c', 'a', 'd']] ============================== c -5 a 4 d 6 dtype: int64 ================================= s['b' : 'c'] ================================= b 7 c -5 dtype: int64

2.1.7 布尔索引

s = pd.Series([4, 7, -5, 3], index=['d', 'b', 'a', 'c'])

log("s[s > 0]", s[s > 0])

=================================== s[s > 0] =================================== d 4 b 7 c 3 dtype: int64

2.2 DataFrame

2.2.1 设置行/列索引的 name 属性

data = {'Nevada': {2001: 2.4, 2002: 2.9},

'Ohio': {2001: 1.5, 2001: 1.7, 2002: 3.6}}

df = pd.DataFrame(data)

df.index.name = 'year'

df.columns.name = 'state'

log("df", df)

====================================== df ====================================== state Nevada Ohio year 2001 2.4 1.7 2002 2.9 3.6

2.2.2 重建索引

在 DataFrame 中, reindex 可以改变行索引,列索引,也可以同时改变两者。

fill method 只对行重新索引有效,不适用列。

df = pd.DataFrame(np.arange(9).reshape((3,3)),

index=list('acd'),

columns=['Ohio', 'Texas', 'California'])

log("df", df)

# 重建行索引

log("df.reindex(['a', 'b', 'c', 'd'])", df.reindex(['a', 'b', 'c', 'd']))

# 重建列索引

states = ['Texas', 'Utah', 'California']

log("df.reindex(columns=states)", df.reindex(columns=states))

====================================== df ====================================== Ohio Texas California a 0 1 2 c 3 4 5 d 6 7 8 ======================= df.reindex(['a', 'b', 'c', 'd']) ======================= Ohio Texas California a 0.0 1.0 2.0 b NaN NaN NaN c 3.0 4.0 5.0 d 6.0 7.0 8.0 ========================== df.reindex(columns=states) ========================== Texas Utah California a 1 NaN 2 c 4 NaN 5 d 7 NaN 8

2.2.3 索引对齐

DataFrame 在进行数据计算时, 会自动按行和列进行数据对齐 。 最终的计算结果会合并两个 DataFrame 。

df1 = pd.DataFrame(np.arange(9.).reshape((3, 3)),

columns=list('bcd'),

index=['Ohio', 'Texas', 'Colorado'])

df2 = pd.DataFrame(np.arange(12.).reshape((4, 3)),

columns=list('bde'),

index=['Utah', 'Ohio', 'Texas', 'Oregon'])

log("df1", df1)

log("df2", df2)

log("df1 + df2", df1 + df2)

2.2.4 列赋值

当将列表或数组赋值给一个列时,长度必须和 DataFrame 的长度相匹配。

data = {'state': ['Ohio', 'Ohio', 'Ohio', 'Nevada', 'Nevada', 'Nevada'],

'year': [2000, 2001, 2002, 2001, 2002, 2003],

'pop': [1.5, 1.7, 3.6, 2.4, 2.9, 3.2]}

columns = ['year', 'state', 'pop']

index = ['one', 'two', 'three', 'four', 'five', 'six']

df = pd.DataFrame(data, columns=columns, index=index)

df['debt'] = 16.5 # 标量赋值

df['income'] = np.arange(6.) # 数组赋值

log("df", df)

====================================== df ======================================

year state pop debt income

one 2000 Ohio 1.5 16.5 0.0

two 2001 Ohio 1.7 16.5 1.0

three 2002 Ohio 3.6 16.5 2.0

four 2001 Nevada 2.4 16.5 3.0

five 2002 Nevada 2.9 16.5 4.0

six 2003 Nevada 3.2 16.5 5.0

将 Series 赋值给一列时,Series 的索引会按照 DataFrame 的索引重新排列。

val = pd.Series([-1.2, -1.5, -1.7], index=['two', 'four', 'five'])

df['debt'] = val

log("df", df)

====================================== df ======================================

year state pop debt income

one 2000 Ohio 1.5 NaN 0.0

two 2001 Ohio 1.7 -1.2 1.0

three 2002 Ohio 3.6 NaN 2.0

four 2001 Nevada 2.4 -1.5 3.0

five 2002 Nevada 2.9 -1.7 4.0

six 2003 Nevada 3.2 NaN 5.0

2.2.5 删除行/列

data = {'state': ['Ohio', 'Ohio', 'Ohio', 'Nevada', 'Nevada', 'Nevada'],

'year': [2000, 2001, 2002, 2001, 2002, 2003],

'pop': [1.5, 1.7, 3.6, 2.4, 2.9, 3.2]}

columns = ['year', 'state', 'pop']

index = ['one', 'two', 'three', 'four', 'five', 'six']

df = pd.DataFrame(data, columns=columns, index=index)

del df['pop']

yearSeries = df.pop('year')

df.drop('state', axis='columns', inplace=True)

log("df", df)

====================================== df ====================================== Empty DataFrame Columns: [] Index: [one, two, three, four, five, six]

df = pd.DataFrame(np.arange(16).reshape((4, 4)),

index=["Ohio", "Colorado", "Utah", "New York"],

columns=["one", "two", "three", "four"])

log("df", df)

log("df.drop('Colorado')", df.drop('Colorado')) # 删除行

log("df.drop('two', axis='columns')", df.drop('two', axis='columns'))

====================================== df ======================================

one two three four

Ohio 0 1 2 3

Colorado 4 5 6 7

Utah 8 9 10 11

New York 12 13 14 15

============================= df.drop('Colorado') ==============================

one two three four

Ohio 0 1 2 3

Utah 8 9 10 11

New York 12 13 14 15

======================== df.drop('two', axis='columns') ========================

one three four

Ohio 0 2 3

Colorado 4 6 7

Utah 8 10 11

New York 12 14 15

2.2.6 标签索引

从 DataFrame 中玄虚的列是数据的 视图 ,而不是拷贝。

如果需要复制,应当显式地使用 Series 的 copy 方法。

返回的 Series 与原 DataFrame 有相同的索引,且 Series 的 name 属性也会被合理地设置。

data = {'state': ['Ohio', 'Ohio', 'Ohio', 'Nevada', 'Nevada', 'Nevada'],

'year': [2000, 2001, 2002, 2001, 2002, 2003],

'pop': [1.5, 1.7, 3.6, 2.4, 2.9, 3.2]}

columns = ['year', 'state', 'pop']

df = pd.DataFrame(data, columns=columns)

log("df", df)

log("df['state']", df['state'])

log("df.year", df.year)

log("df[['state', 'pop']]", df[['state', 'pop']])

====================================== df ======================================

year state pop

0 2000 Ohio 1.5

1 2001 Ohio 1.7

2 2002 Ohio 3.6

3 2001 Nevada 2.4

4 2002 Nevada 2.9

5 2003 Nevada 3.2

================================= df['state'] ==================================

0 Ohio

1 Ohio

2 Ohio

3 Nevada

4 Nevada

5 Nevada

Name: state, dtype: object

=================================== df.year ====================================

0 2000

1 2001

2 2002

3 2001

4 2002

5 2003

Name: year, dtype: int64

============================= df[['state', 'pop']] =============================

state pop

0 Ohio 1.5

1 Ohio 1.7

2 Ohio 3.6

3 Nevada 2.4

4 Nevada 2.9

5 Nevada 3.2

2.2.7 正则索引

df = pd.DataFrame(np.random.randn(6, 4),

index=list('ABCDEF'),

columns=['one', 'two', 'three', 'four'])

log("df", df)

log("df.filter(regex=r'^t.*$')", df.filter(regex=r'^t.*$'))

====================================== df ======================================

one two three four

A 0.266558 0.390929 0.381822 -0.662022

B 0.947612 1.492351 1.824414 -0.682042

C 0.920167 -0.387809 -1.606654 -0.692762

D -0.491672 0.135303 1.653127 0.036277

E -0.922068 0.128126 -1.823203 0.054199

F -0.023060 -0.725380 0.062327 -0.608580

========================== df.filter(regex=r'^t.*$') ===========================

two three

A 0.390929 0.381822

B 1.492351 1.824414

C -0.387809 -1.606654

D 0.135303 1.653127

E 0.128126 -1.823203

F -0.725380 0.062327

2.2.8 整数索引

df = pd.DataFrame(np.arange(16).reshape((4, 4)),

index=["Ohio", "Colorado", "Utah", "New York"],

columns=["one", "two", "three", "four"])

log("df", df)

log("df[:2]", df[:2])

====================================== df ======================================

one two three four

Ohio 0 1 2 3

Colorado 4 5 6 7

Utah 8 9 10 11

New York 12 13 14 15

==================================== df[:2] ====================================

one two three four

Ohio 0 1 2 3

Colorado 4 5 6 7

2.2.9 布尔索引

df = pd.DataFrame(np.arange(16).reshape((4, 4)),

index=["Ohio", "Colorado", "Utah", "New York"],

columns=["one", "two", "three", "four"])

log("df", df)

log("df[df['three'] > 5]", df[df['three'] > 5])

====================================== df ======================================

one two three four

Ohio 0 1 2 3

Colorado 4 5 6 7

Utah 8 9 10 11

New York 12 13 14 15

============================= df[df['three'] > 5] ==============================

one two three four

Colorado 4 5 6 7

Utah 8 9 10 11

New York 12 13 14 15

2.2.10 loc 索引

df = pd.DataFrame(np.arange(16).reshape((4, 4)),

index=["Ohio", "Colorado", "Utah", "New York"],

columns=["one", "two", "three", "four"])

log("df", df)

log("df.loc['Colorado', ['two', 'three']]", df.loc['Colorado', ['two', 'three']])

log("df.loc[:'Utah', 'two']", df.loc[:'Utah', 'two'])

====================================== df ======================================

one two three four

Ohio 0 1 2 3

Colorado 4 5 6 7

Utah 8 9 10 11

New York 12 13 14 15

===================== df.loc['Colorado', ['two', 'three']] =====================

two 5

three 6

Name: Colorado, dtype: int64

============================ df.loc[:'Utah', 'two'] ============================

Ohio 1

Colorado 5

Utah 9

Name: two, dtype: int64

2.2.11 iloc 索引

df = pd.DataFrame(np.arange(16).reshape((4, 4)),

index=["Ohio", "Colorado", "Utah", "New York"],

columns=["one", "two", "three", "four"])

log("df", df)

log("df.iloc[2, [3, 0, 1]]", df.iloc[2, [3, 0, 1]])

log("df.iloc[[1, 2], [3, 0, 1]]", df.iloc[[1, 2], [3, 0, 1]])

log("df.iloc[:, :3][df.three > 5]", df.iloc[:, :3][df.three > 5])

====================================== df ======================================

one two three four

Ohio 0 1 2 3

Colorado 4 5 6 7

Utah 8 9 10 11

New York 12 13 14 15

============================ df.iloc[2, [3, 0, 1]] =============================

four 11

one 8

two 9

Name: Utah, dtype: int64

========================== df.iloc[[1, 2], [3, 0, 1]] ==========================

four one two

Colorado 7 4 5

Utah 11 8 9

========================= df.iloc[:, :3][df.three > 5] =========================

one two three

Colorado 4 5 6

Utah 8 9 10

New York 12 13 14

2.2.12 at 索引

df = pd.DataFrame(np.random.randn(6, 4),

index=list('ABCDEF'),

columns=['one', 'two', 'three', 'four'])

log("df", df)

log("df.at['A', 'one']", df.at['A', 'one'])

2.2.13 随机索引

df = pd.DataFrame(np.arange(16).reshape((4, 4)),

index=["Ohio", "Colorado", "Utah", "New York"],

columns=["one", "two", "three", "four"])

log("df", df)

log("df.sample(frac=0.5)", df.sample(frac=0.5)) # Randomly

log("df.sample(n=3)", df.sample(n=3)) # Randomly

====================================== df ======================================

one two three four

Ohio 0 1 2 3

Colorado 4 5 6 7

Utah 8 9 10 11

New York 12 13 14 15

============================= df.sample(frac=0.5) ==============================

one two three four

Ohio 0 1 2 3

New York 12 13 14 15

================================ df.sample(n=3) ================================

one two three four

Colorado 4 5 6 7

Ohio 0 1 2 3

Utah 8 9 10 11

2.2.14 重命名行/列

df = pd.DataFrame(np.random.randn(6, 4),

index=list('ABCDEF'),

columns=['one', 'two', 'three', 'four'])

log("origin df", df)

df.rename(columns={'three': 'san'}, inplace=True)

log("df (column index renamed)", df)

df.rename(index={'C': 'ccc'}, inplace=True)

log("df (index renamed)", df)

================================== origin df ===================================

one two three four

A -1.293186 -1.303202 -0.434815 1.157079

B 0.559491 1.651170 1.130642 0.372430

C 0.087382 0.948737 0.103419 -0.364204

D 0.363415 0.463077 -1.130338 -1.252423

E -0.570689 -1.141226 0.144087 -0.297187

F 1.028409 1.627355 -1.264463 -0.109870

========================== df (column index renamed) ===========================

one two san four

A -1.293186 -1.303202 -0.434815 1.157079

B 0.559491 1.651170 1.130642 0.372430

C 0.087382 0.948737 0.103419 -0.364204

D 0.363415 0.463077 -1.130338 -1.252423

E -0.570689 -1.141226 0.144087 -0.297187

F 1.028409 1.627355 -1.264463 -0.109870

============================== df (index renamed) ==============================

one two san four

A -1.293186 -1.303202 -0.434815 1.157079

B 0.559491 1.651170 1.130642 0.372430

ccc 0.087382 0.948737 0.103419 -0.364204

D 0.363415 0.463077 -1.130338 -1.252423

E -0.570689 -1.141226 0.144087 -0.297187

F 1.028409 1.627355 -1.264463 -0.109870

3 数据清洗

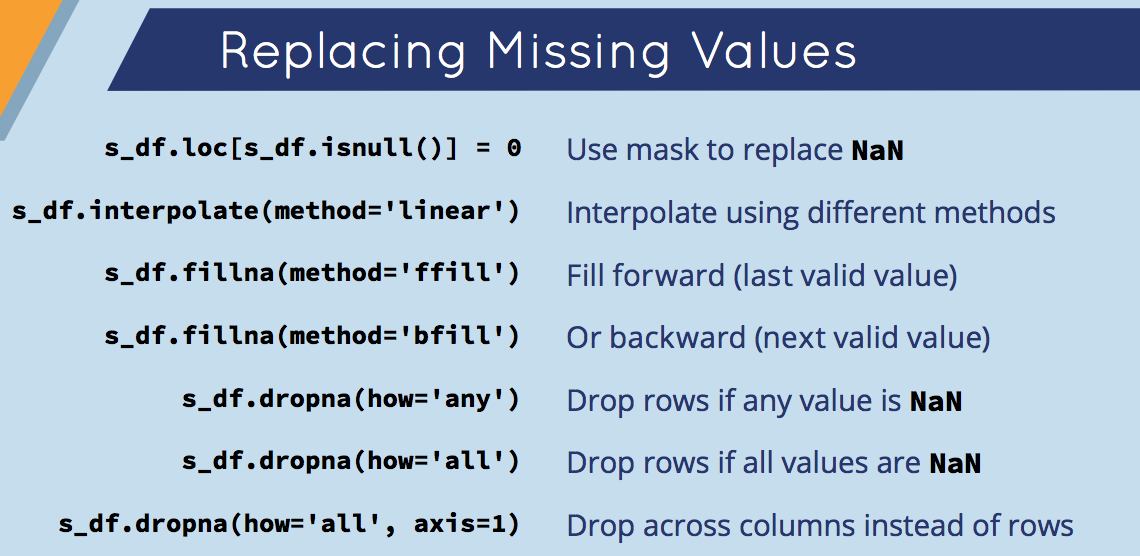

3.1 处理缺失值

pandas 对象的所有统计信息默认情况下是 排除 缺失值的。

Python 内建的 None 值也被当做 NA 处理。

3.1.1 过滤缺失值(dropna)

s = pd.Series([1, np.nan, 3.5, np.nan, 7])

log("s.dropna()", s.dropna()) # 等价于 s[s.notnull()]

================================== s.dropna() ================================== 0 1.0 2 3.5 4 7.0 dtype: float64

当处理 DataFrame 对象时, dropna 默认情况下删除包含缺失值的行 :

(使用 axis = 1 删除列)

df = pd.DataFrame([

[1, 6.5, 3],

[1, np.nan, np.nan],

[np.nan, np.nan, np.nan],

[np.nan, 6.5, 3]

])

log("df", df)

log("df.dropna()", df.dropna())

====================================== df ======================================

0 1 2

0 1.0 6.5 3.0

1 1.0 NaN NaN

2 NaN NaN NaN

3 NaN 6.5 3.0

================================= df.dropna() ==================================

0 1 2

0 1.0 6.5 3.0

当传入 how = 'all' 时, 删除所有值均为 NA 的那些行 :

log("df.dropna(how='all')", df.dropna(how='all'))

使用 thresh 参数来保留 包含一定数量观察值 的行:

df = pd.DataFrame(np.random.randn(7, 3))

df.iloc[:4, 1] = np.nan

df.iloc[:2, 2] = np.nan

log("df", df)

log("df.dropna()", df.dropna())

log("df.dropna(thresh=2)", df.dropna(thresh=2))

====================================== df ======================================

0 1 2

0 -0.215937 NaN NaN

1 -1.358234 NaN NaN

2 0.331335 NaN -0.901148

3 -0.466495 NaN 2.392151

4 -0.178190 0.446226 0.710607

5 -0.446093 -0.317979 -0.601814

6 0.550952 1.036072 -1.812287

================================= df.dropna() ==================================

0 1 2

4 -0.178190 0.446226 0.710607

5 -0.446093 -0.317979 -0.601814

6 0.550952 1.036072 -1.812287

============================= df.dropna(thresh=2) ==============================

0 1 2

2 0.331335 NaN -0.901148

3 -0.466495 NaN 2.392151

4 -0.178190 0.446226 0.710607

5 -0.446093 -0.317979 -0.601814

6 0.550952 1.036072 -1.812287

3.1.2 补全缺失值(fillna)

df = pd.DataFrame(np.random.randn(7, 3))

df.iloc[:4, 1] = np.nan

df.iloc[:2, 2] = np.nan

log("df", df)

log("df.fillna(0)", df.fillna(0))

====================================== df ======================================

0 1 2

0 0.997841 NaN NaN

1 -0.554321 NaN NaN

2 -0.804640 NaN 0.838684

3 0.666262 NaN -1.009344

4 0.049296 -0.091335 -0.724490

5 0.172516 -0.255515 0.760672

6 3.210886 1.221200 0.913991

================================= df.fillna(0) =================================

0 1 2

0 0.997841 0.000000 0.000000

1 -0.554321 0.000000 0.000000

2 -0.804640 0.000000 0.838684

3 0.666262 0.000000 -1.009344

4 0.049296 -0.091335 -0.724490

5 0.172516 -0.255515 0.760672

6 3.210886 1.221200 0.913991

调用 fillna 时使用字典,可以 为不同列设定不同的填充值 :

log("df.fillna({1: 0.5, 2: 0})", df.fillna({1: 0.5, 2: 0}))

========================== df.fillna({1: 0.5, 2: 0}) ===========================

0 1 2

0 0.997841 0.500000 0.000000

1 -0.554321 0.500000 0.000000

2 -0.804640 0.500000 0.838684

3 0.666262 0.500000 -1.009344

4 0.049296 -0.091335 -0.724490

5 0.172516 -0.255515 0.760672

6 3.210886 1.221200 0.913991

使用 插值方法 :

df = pd.DataFrame(np.random.randn(6, 3))

df.iloc[2:, 1] = np.nan

df.iloc[4:, 2] = np.nan

log("df", df)

log("df.fillna(method='ffill')", df.fillna(method='ffill'))

log("df.fillna(method='ffill', limit=2)", df.fillna(method='ffill', limit=2))

====================================== df ======================================

0 1 2

0 0.663103 0.176675 -1.293323

1 -0.108963 0.912126 0.806356

2 0.215125 NaN -1.686062

3 -0.411193 NaN -0.738710

4 -0.038864 NaN NaN

5 -0.561398 NaN NaN

========================== df.fillna(method='ffill') ===========================

0 1 2

0 0.663103 0.176675 -1.293323

1 -0.108963 0.912126 0.806356

2 0.215125 0.912126 -1.686062

3 -0.411193 0.912126 -0.738710

4 -0.038864 0.912126 -0.738710

5 -0.561398 0.912126 -0.738710

====================== df.fillna(method='ffill', limit=2) ======================

0 1 2

0 0.663103 0.176675 -1.293323

1 -0.108963 0.912126 0.806356

2 0.215125 0.912126 -1.686062

3 -0.411193 0.912126 -0.738710

4 -0.038864 NaN -0.738710

5 -0.561398 NaN -0.738710

3.2 数据转换

3.2.1 删除重复值

df = pd.DataFrame(

{

"k1": ['one', 'two'] * 3 + ['two'],

"k2": [1, 1, 2, 3, 3, 4, 4]

}

)

log("df", df)

log("df.duplicated()", df.duplicated())

====================================== df ======================================

k1 k2

0 one 1

1 two 1

2 one 2

3 two 3

4 one 3

5 two 4

6 two 4

=============================== df.duplicated() ================================

0 False

1 False

2 False

3 False

4 False

5 False

6 True

dtype: bool

drop_duplicates 返回的是 DataFrame ,内容是 duplicated 返回 Series 中为 False 的部分:

log("df.drop_duplicates()", df.drop_duplicates())

============================= df.drop_duplicates() =============================

k1 k2

0 one 1

1 two 1

2 one 2

3 two 3

4 one 3

5 two 4

基于某列 去除重复值:

df['v1'] = range(7)

log("df", df)

log("df.drop_duplicates(['k1'])", df.drop_duplicates(['k1']))

====================================== df ======================================

k1 k2 v1

0 one 1 0

1 two 1 1

2 one 2 2

3 two 3 3

4 one 3 4

5 two 4 5

6 two 4 6

========================== df.drop_duplicates(['k1']) ==========================

k1 k2 v1

0 one 1 0

1 two 1 1

drop_duplicates 默认保留第一个观测值,传入参数 keep = 'last' 将返回最后一个:

log("df.drop_duplicates(['k1', 'k2'], keep='last')", df.drop_duplicates(['k1', 'k2'], keep='last'))

================ df.drop_duplicates(['k1', 'k2'], keep='last') =================

k1 k2 v1

0 one 1 0

1 two 1 1

2 one 2 2

3 two 3 3

4 one 3 4

6 two 4 6

3.2.2 使用函数或映射进行数据转换

df = pd.DataFrame(

{

"food": ['bacon',

'pulled pork',

'bacon',

'Pastrami',

'corned beef',

'Bacon',

'pastrami',

'honey ham',

'nova lox'],

"ounces": [4, 3, 12, 6, 7.5, 8, 3, 5, 6]

}

)

meat_to_animal = {

"bacon": 'pig',

"pulled pork": 'pig',

"pastrami": 'cow',

"corned beef": 'cow',

"honey ham": 'pig',

"nova lox": 'salmon'

}

log("df", df)

# 将值转换为小写

lowercased = df['food'].str.lower()

log("lowercased", lowercased)

df['animal'] = lowercased.map(meat_to_animal)

log("df", df)

# 也可以 data['food'].map(lambda x: meat_to_animal[x.lower()])

====================================== df ======================================

food ounces

0 bacon 4.0

1 pulled pork 3.0

2 bacon 12.0

3 Pastrami 6.0

4 corned beef 7.5

5 Bacon 8.0

6 pastrami 3.0

7 honey ham 5.0

8 nova lox 6.0

================================== lowercased ==================================

0 bacon

1 pulled pork

2 bacon

3 pastrami

4 corned beef

5 bacon

6 pastrami

7 honey ham

8 nova lox

Name: food, dtype: object

====================================== df ======================================

food ounces animal

0 bacon 4.0 pig

1 pulled pork 3.0 pig

2 bacon 12.0 pig

3 Pastrami 6.0 cow

4 corned beef 7.5 cow

5 Bacon 8.0 pig

6 pastrami 3.0 cow

7 honey ham 5.0 pig

8 nova lox 6.0 salmon

3.2.3 替代值(replace)

使用 fillna() 是通用值替换的特殊案例。

使用 map() 可以用来修改一个数据中的值,但是 replace() 提供了 更为简单 的实现。

s = pd.Series([1, -999, 2, -999, -1000, 3])

log("s", s)

log("s.replace(-999, np.nan)", s.replace(-999, np.nan))

====================================== s ======================================= 0 1 1 -999 2 2 3 -999 4 -1000 5 3 dtype: int64 =========================== s.replace(-999, np.nan) ============================ 0 1.0 1 NaN 2 2.0 3 NaN 4 -1000.0 5 3.0 dtype: float64

如果想要 一次替代多个值 ,可以传入一个列表:

log("s.replace([-999, -1000], np.nan)", s.replace([-999, -1000], np.nan))

======================= s.replace([-999, -1000], np.nan) ======================= 0 1.0 1 NaN 2 2.0 3 NaN 4 NaN 5 3.0 dtype: float64

将不同的值替换为不同的值 :

log("s.replace([-999, -1000], [np.nan, 0])", s.replace([-999, -1000], [np.nan, 0]))

log("s.replace({-999: np.nan, -1000: 0})", s.replace({-999: np.nan, -1000: 0}))

==================== s.replace([-999, -1000], [np.nan, 0]) =====================

0 1.0

1 NaN

2 2.0

3 NaN

4 0.0

5 3.0

dtype: float64

===================== s.replace({-999: np.nan, -1000: 0}) ======================

0 1.0

1 NaN

2 2.0

3 NaN

4 0.0

5 3.0

dtype: float64

3.2.4 重命名索引

df = pd.DataFrame(np.arange(12).reshape((3,4)),

index=['Ohio', 'Colorado', 'New York'],

columns=['one', 'two', 'three', 'four'])

df.index = df.index.map(lambda x: x[:4].upper())

log("df", df)

log("df.rename(index=str.title, columns=str.upper)", df.rename(index=str.title, columns=str.upper))

log("df.rename(index={'OHIO': 'INDIANA'}, columns={'three': 'peekaboo'})", df.rename(index={'OHIO': 'INDIANA'}, columns={'three': 'peekaboo'}))

====================================== df ======================================

one two three four

OHIO 0 1 2 3

COLO 4 5 6 7

NEW 8 9 10 11

================ df.rename(index=str.title, columns=str.upper) =================

ONE TWO THREE FOUR

Ohio 0 1 2 3

Colo 4 5 6 7

New 8 9 10 11

===== df.rename(index={'OHIO': 'INDIANA'}, columns={'three': 'peekaboo'}) ======

one two peekaboo four

INDIANA 0 1 2 3

COLO 4 5 6 7

NEW 8 9 10 11

3.2.5 离散化和分箱(cut/qcut)

连续值经常需要离散化,或者分离成 箱子 进行分析。

ages = [20, 22, 25, 27, 21, 23, 37, 31, 61, 45, 41, 32]

bins = [18, 25, 35, 60, 100]

cats = pd.cut(ages, bins)

log("cats", cats)

log("cats.codes", cats.codes)

log("cats.categories", cats.categories)

log("cats.value_counts()", cats.value_counts())

===================================== cats =====================================

[(18, 25], (18, 25], (18, 25], (25, 35], (18, 25], ..., (25, 35], (60, 100], (35, 60], (35, 60], (25, 35]]

Length: 12

Categories (4, interval[int64]): [(18, 25] < (25, 35] < (35, 60] < (60, 100]]

================================== cats.codes ==================================

[0 0 0 1 0 0 2 1 3 2 2 1]

=============================== cats.categories ================================

IntervalIndex([(18, 25], (25, 35], (35, 60], (60, 100]]

closed='right',

dtype='interval[int64]')

============================= cats.value_counts() ==============================

(18, 25] 5

(25, 35] 3

(35, 60] 3

(60, 100] 1

dtype: int64

通过传递 right = False 来改变区间哪一边是封闭的。

自定义箱名 :

group_names = ['Youth', 'YoungAdult', 'MiddleAged', 'Senior']

log("pd.cut(ages, bins, labels=group_names)", pd.cut(ages, bins, labels=group_names))

==================== pd.cut(ages, bins, labels=group_names) ==================== [Youth, Youth, Youth, YoungAdult, Youth, ..., YoungAdult, Senior, MiddleAged, MiddleAged, YoungAdult] Length: 12 Categories (4, object): [Youth < YoungAdult < MiddleAged < Senior]

若 使用分箱的个数代替箱边 , pandas 将根据数据中的最小值和最大值计算出等长的箱:

data = np.random.rand(20)

# precision 表示将十进制精度限制在两位

log("pd.cut(data, 4, precision=2)", pd.cut(data, 4, precision=2))

========================= pd.cut(data, 4, precision=2) ========================= [(0.5, 0.73], (0.5, 0.73], (0.73, 0.97], (0.26, 0.5], (0.024, 0.26], ..., (0.024, 0.26], (0.26, 0.5], (0.73, 0.97], (0.5, 0.73], (0.73, 0.97]] Length: 20 Categories (4, interval[float64]): [(0.024, 0.26] < (0.26, 0.5] < (0.5, 0.73] < (0.73, 0.97]]

使用 cut 通常不会使每个箱具有相同的数据量。

qcut 基于样本分位数进行分箱,可以获得 等长的箱 :

data = np.random.randn(1000)

qcats = pd.qcut(data, 4)

log("qcats.value_counts()", qcats.value_counts())

============================= qcats.value_counts() ============================= (-3.532, -0.671] 250 (-0.671, 0.0582] 250 (0.0582, 0.744] 250 (0.744, 3.077] 250 dtype: int64

自定义分位数 :(0 和 1 之间的数字)

qcuts = pd.qcut(data, [0, 0.1, 0.5, 0.9, 1])

log("qcuts.value_counts()", qcuts.value_counts())

============================= qcuts.value_counts() ============================= (-3.532, -1.302] 100 (-1.302, 0.0582] 400 (0.0582, 1.374] 400 (1.374, 3.077] 100 dtype: int64

3.2.6 检测异常值(any)

df = pd.DataFrame(np.random.randn(5, 4))

log("df", df)

log("np.abs(df) > 1", np.abs(df) > 1)

log("(np.abs(df) > 1).any()", (np.abs(df) > 1).any())

log("(np.abs(df) > 1).any(axis=1)", (np.abs(df) > 1).any(axis=1))

log("(np.abs(df) > 1).any().any()", (np.abs(df) > 1).any().any())

====================================== df ======================================

0 1 2 3

0 0.634347 -0.504562 0.337217 0.191453

1 -0.378714 -1.212778 -0.194362 -2.113623

2 -1.422029 0.532520 -0.348306 0.059880

3 -1.184722 -0.297884 -0.620028 0.378589

4 0.846286 -0.714991 -0.127334 -0.089754

================================ np.abs(df) > 1 ================================

0 1 2 3

0 False False False False

1 False True False True

2 True False False False

3 True False False False

4 False False False False

============================ (np.abs(df) > 1).any() ============================

0 True

1 True

2 False

3 True

dtype: bool

========================= (np.abs(df) > 1).any(axis=1) =========================

0 False

1 True

2 True

3 True

4 False

dtype: bool

========================= (np.abs(df) > 1).any().any() =========================

True

3.2.7 随机重排序(sample/take)

df = pd.DataFrame(np.arange(5*4).reshape((5, 4)))

sampler = np.random.permutation(5)

log("df", df)

log("sampler", sampler)

log("df.take(sampler)", df.take(sampler)) # 和 iloc 类似

log("df.sample(3)", df.sample(3))

log("df.sample(3, replace=True)", df.sample(3, replace=True)) # 允许有重复

====================================== df ======================================

0 1 2 3

0 0 1 2 3

1 4 5 6 7

2 8 9 10 11

3 12 13 14 15

4 16 17 18 19

=================================== sampler ====================================

[2 3 0 1 4]

=============================== df.take(sampler) ===============================

0 1 2 3

2 8 9 10 11

3 12 13 14 15

0 0 1 2 3

1 4 5 6 7

4 16 17 18 19

================================= df.sample(3) =================================

0 1 2 3

2 8 9 10 11

0 0 1 2 3

1 4 5 6 7

========================== df.sample(3, replace=True) ==========================

0 1 2 3

3 12 13 14 15

3 12 13 14 15

0 0 1 2 3

3.2.7.1 使用 take 优化内存

values = pd.Series([0, 1, 0, 0] * 2)

dim = pd.Series(['apple', 'orange'])

log("values", values)

log("dim", dim)

log("dim.take(values)", dim.take(values))

============================================== values ============================================== 0 0 1 1 2 0 3 0 4 0 5 1 6 0 7 0 dtype: int64 =============================================== dim ================================================ 0 apple 1 orange dtype: object ========================================= dim.take(values) ========================================= 0 apple 1 orange 0 apple 0 apple 0 apple 1 orange 0 apple 0 apple dtype: object

3.2.8 指标矩阵(get_dummies)

如果一列有 k 个不同的值,可以衍生一个k 列的,值为 0 或 1 的矩阵。

df = pd.DataFrame({'key': list('bbacab'), 'data': range(6)})

log("df", df)

log("pd.get_dummies(df['key'])", pd.get_dummies(df['key']))

====================================== df ====================================== key data 0 b 0 1 b 1 2 a 2 3 c 3 4 a 4 5 b 5 ========================== pd.get_dummies(df['key']) =========================== a b c 0 0 1 0 1 0 1 0 2 1 0 0 3 0 0 1 4 1 0 0 5 0 1 0

添加前缀:

dummies = pd.get_dummies(df['key'], prefix='key')

log("dummies", dummies)

df_with_dummies = df[['data']].join(dummies)

log("df_with_dummies", df_with_dummies)

=================================== dummies ==================================== key_a key_b key_c 0 0 1 0 1 0 1 0 2 1 0 0 3 0 0 1 4 1 0 0 5 0 1 0 =============================== df_with_dummies ================================ data key_a key_b key_c 0 0 0 1 0 1 1 0 1 0 2 2 1 0 0 3 3 0 0 1 4 4 1 0 0 5 5 0 1 0

get_dummies 与 cut 等离散化函数结合使用是 统计操作中一个有用的方法 :

np.random.seed(12345)

values = np.random.rand(10)

log("values", values)

bins = [0, 0.2, 0.4, 0.6, 0.8, 1]

log("pd.get_dummies(pd.cut(values, bins))", pd.get_dummies(pd.cut(values, bins)))

==================================== values ==================================== [0.92961609 0.31637555 0.18391881 0.20456028 0.56772503 0.5955447 0.96451452 0.6531771 0.74890664 0.65356987] ===================== pd.get_dummies(pd.cut(values, bins)) ===================== (0.0, 0.2] (0.2, 0.4] (0.4, 0.6] (0.6, 0.8] (0.8, 1.0] 0 0 0 0 0 1 1 0 1 0 0 0 2 1 0 0 0 0 3 0 1 0 0 0 4 0 0 1 0 0 5 0 0 1 0 0 6 0 0 0 0 1 7 0 0 0 1 0 8 0 0 0 1 0 9 0 0 0 1 0

3.2.9 向量化函数

3.2.9.1 str

为序列添加字符串操作方法,并能跳过 NA

data = {

'Dave': 'dave@google.com',

"Steve": 'steve@gmail.com',

"Rob": 'rob@gmail.com',

"Wes": np.nan

}

s = pd.Series(data)

log("s", s)

log("s.str.contains('gmail')", s.str.contains('gmail'))

====================================== s =======================================

Dave dave@google.com

Steve steve@gmail.com

Rob rob@gmail.com

Wes NaN

dtype: object

=========================== s.str.contains('gmail') ============================

Dave False

Steve True

Rob True

Wes NaN

dtype: object

3.2.9.2 dt

为序列添加时间操作方法

t = pd.date_range('2000-01-01', periods=10)

s = pd.Series(t)

s[3] = np.nan

log("s", s)

log("s.dt.minute", s.dt.minute)

log("s.dt.dayofyear", s.dt.dayofyear)

log("s.dt.weekday", s.dt.weekday)

log("s.dt.weekday_name", s.dt.weekday_name)

log("s.dt.days_in_month", s.dt.days_in_month)

log("s.dt.is_month_start", s.dt.is_month_start)

====================================== s ======================================= 0 2000-01-01 1 2000-01-02 2 2000-01-03 3 NaT 4 2000-01-05 5 2000-01-06 6 2000-01-07 7 2000-01-08 8 2000-01-09 9 2000-01-10 dtype: datetime64[ns] ================================= s.dt.minute ================================== 0 0.0 1 0.0 2 0.0 3 NaN 4 0.0 5 0.0 6 0.0 7 0.0 8 0.0 9 0.0 dtype: float64 ================================ s.dt.dayofyear ================================ 0 1.0 1 2.0 2 3.0 3 NaN 4 5.0 5 6.0 6 7.0 7 8.0 8 9.0 9 10.0 dtype: float64 ================================= s.dt.weekday ================================= 0 5.0 1 6.0 2 0.0 3 NaN 4 2.0 5 3.0 6 4.0 7 5.0 8 6.0 9 0.0 dtype: float64 ============================== s.dt.weekday_name =============================== 0 Saturday 1 Sunday 2 Monday 3 NaN 4 Wednesday 5 Thursday 6 Friday 7 Saturday 8 Sunday 9 Monday dtype: object ============================== s.dt.days_in_month ============================== 0 31.0 1 31.0 2 31.0 3 NaN 4 31.0 5 31.0 6 31.0 7 31.0 8 31.0 9 31.0 dtype: float64 ============================= s.dt.is_month_start ============================== 0 True 1 False 2 False 3 False 4 False 5 False 6 False 7 False 8 False 9 False dtype: bool

4 数据规整:连接,联合,重塑

4.1 分层索引

分层索引提供了一种在 低维度的形式中处理高维度数据 的方式,它使用 pd.MultiIndex 类来表示。

比如在分析股票数据:

- 一级行索引可以是日期

- 二级行索引可以是股票代码

- 列索引可以是股票的交易量,开盘价,收盘价等等

这样就可以把多个股票放在同一个时间维度下进行考察和分析。

s = pd.Series(np.random.randn(9),

index=[['a', 'a', 'a', 'b', 'b', 'c', 'c', 'd', 'd'],

[1, 2, 3, 1, 3, 1, 2, 2, 3]])

log("s", s)

log("s.index", s.index)

====================================== s =======================================

a 1 1.007189

2 -1.296221

3 0.274992

b 1 0.228913

3 1.352917

c 1 0.886429

2 -2.001637

d 2 -0.371843

3 1.669025

dtype: float64

=================================== s.index ====================================

MultiIndex(levels=[['a', 'b', 'c', 'd'], [1, 2, 3]],

labels=[[0, 0, 0, 1, 1, 2, 2, 3, 3], [0, 1, 2, 0, 2, 0, 1, 1, 2]])

选取数据子集:

log("s['b']", s['b'])

log("s['b':'c']", s['b':'c'])

log("s.loc[['b', 'd']]", s.loc[['b', 'd']])

# 选择内部层级

log("s.loc[:, 2]", s.loc[:, 2])

==================================== s['b'] ==================================== 1 0.228913 3 1.352917 dtype: float64 ================================== s['b':'c'] ================================== b 1 0.228913 3 1.352917 c 1 0.886429 2 -2.001637 dtype: float64 ============================== s.loc[['b', 'd']] =============================== b 1 0.228913 3 1.352917 d 2 -0.371843 3 1.669025 dtype: float64 ================================= s.loc[:, 2] ================================== a -1.296221 c -2.001637 d -0.371843 dtype: float64

4.1.1 层级交换(swaplevel)与排序(sort_index)

swaplevel 接收两个层级序号或层级名称,返回一个进行了层级变更的新对象, 数据是不变的 。

df = pd.DataFrame(np.arange(12).reshape((4, 3)),

index=[['a', 'a', 'b', 'b'], [1, 2, 1, 2]],

columns=[['Ohio', 'Ohio', 'Colorado'], ['Green', 'Red', 'Green']]

)

df.index.names = ['key1', 'key2']

df.columns.names = ['state', 'color']

log("df", df)

log("df.swaplevel('key1', 'key2')", df.swaplevel('key1', 'key2'))

====================================== df ======================================

state Ohio Colorado

color Green Red Green

key1 key2

a 1 0 1 2

2 3 4 5

b 1 6 7 8

2 9 10 11

========================= df.swaplevel('key1', 'key2') =========================

state Ohio Colorado

color Green Red Green

key2 key1

1 a 0 1 2

2 a 3 4 5

1 b 6 7 8

2 b 9 10 11

sort_index 只能在单一层级上对数据进行排序。

log("df.sort_index(level=1)", df.sort_index(level=1))

log("df.swaplevel(0, 1).sort_index(level=0)", df.swaplevel(0, 1).sort_index(level=0))

============================ df.sort_index(level=1) ============================

state Ohio Colorado

color Green Red Green

key1 key2

a 1 0 1 2

b 1 6 7 8

a 2 3 4 5

b 2 9 10 11

==================== df.swaplevel(0, 1).sort_index(level=0) ====================

state Ohio Colorado

color Green Red Green

key2 key1

1 a 0 1 2

b 6 7 8

2 a 3 4 5

b 9 10 11

如果索引按照字典顺序从最外层开始排序,则数据选择性能会更好

4.1.2 列索引转为行索引 (set_index)

df = pd.DataFrame({

"a": range(7),

"b": range(7, 0, -1),

"c": ['one', 'one', 'one', 'two', 'two', 'two', 'two'],

"d": [0, 1, 2, 0, 1, 2, 3]

})

log("df", df)

log("df.set_index(['c', 'd'])", df.set_index(['c', 'd']))

====================================== df ======================================

a b c d

0 0 7 one 0

1 1 6 one 1

2 2 5 one 2

3 3 4 two 0

4 4 3 two 1

5 5 2 two 2

6 6 1 two 3

=========================== df.set_index(['c', 'd']) ===========================

a b

c d

one 0 0 7

1 1 6

2 2 5

two 0 3 4

1 4 3

2 5 2

3 6 1

默认情况下,用过行索引的列会从 DataFrame 中删除,也可以选择留下:

log("df.set_index(['c', 'd'], drop=False)", df.set_index(['c', 'd'], drop=False))

===================== df.set_index(['c', 'd'], drop=False) =====================

a b c d

c d

one 0 0 7 one 0

1 1 6 one 1

2 2 5 one 2

two 0 3 4 two 0

1 4 3 two 1

2 5 2 two 2

3 6 1 two 3

4.1.3 行索引转为列索引 (reset_index)

reset_index 是 set_index 的 反操作 ,分层索引的行索引层级会被移动为列索引。

df = pd.DataFrame({

"a": range(7),

"b": range(7, 0, -1),

"c": ['one', 'one', 'one', 'two', 'two', 'two', 'two'],

"d": [0, 1, 2, 0, 1, 2, 3]

})

log("df", df)

df2 = df.set_index(['c', 'd'])

log("df2", df2)

log("df2.reset_index()", df2.reset_index())

====================================== df ======================================

a b c d

0 0 7 one 0

1 1 6 one 1

2 2 5 one 2

3 3 4 two 0

4 4 3 two 1

5 5 2 two 2

6 6 1 two 3

===================================== df2 ======================================

a b

c d

one 0 0 7

1 1 6

2 2 5

two 0 3 4

1 4 3

2 5 2

3 6 1

============================== df2.reset_index() ===============================

c d a b

0 one 0 0 7

1 one 1 1 6

2 one 2 2 5

3 two 0 3 4

4 two 1 4 3

5 two 2 5 2

6 two 3 6 1

4.2 连接与合并

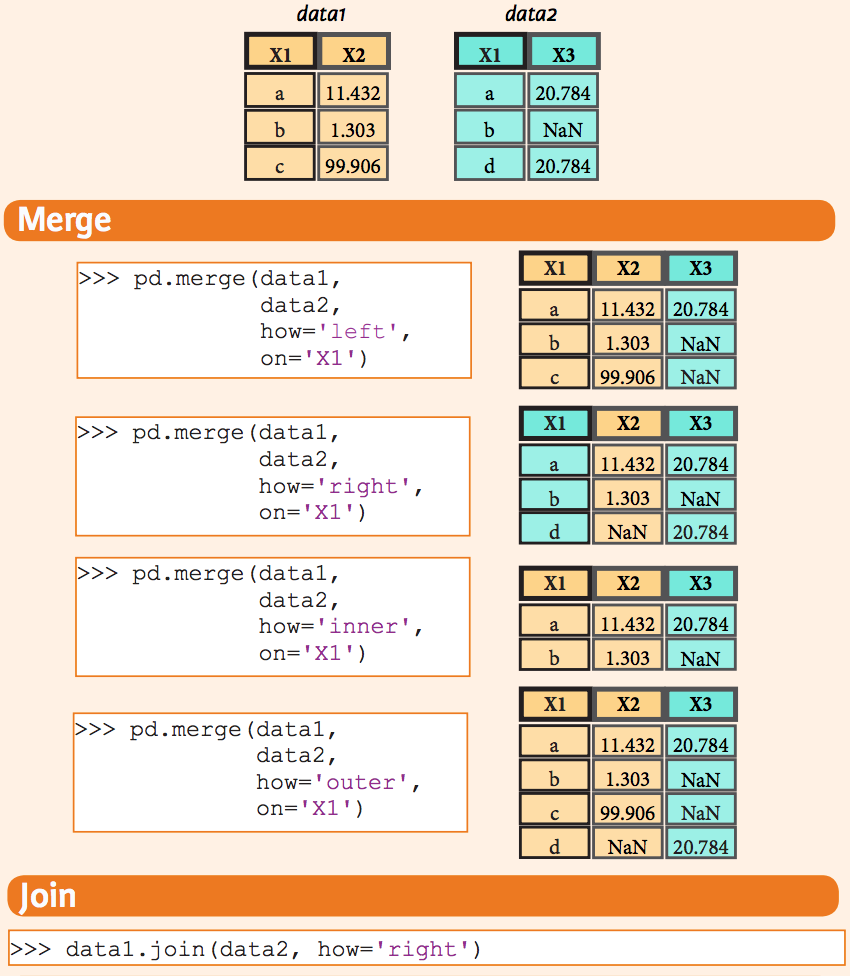

4.2.1 merge

4.2.1.1 基本用法

合并或连接操作是通过一个或多个键,连接行来联合数据集。

df1 = pd.DataFrame({

"key": list('bbacaab'),

"data1": range(7)

})

df2 = pd.DataFrame({

"key": list('abd'),

"data2": range(3)

})

log("df1", df1)

log("df2", df2)

log("pd.merge(df1, df2)", pd.merge(df1, df2))

===================================== df1 ====================================== key data1 0 b 0 1 b 1 2 a 2 3 c 3 4 a 4 5 a 5 6 b 6 ===================================== df2 ====================================== key data2 0 a 0 1 b 1 2 d 2 ============================== pd.merge(df1, df2) ============================== key data1 data2 0 b 0 1 1 b 1 1 2 b 6 1 3 a 2 0 4 a 4 0 5 a 5 0

若没有指定在哪一列上进行连接,即没有指定连接的键信息, merge 会自动将 重叠列名作为连接键 。

显示指定连接键才是好的做法:

pd.merge(df1, df2, on='key')

如果每个对象的列名是不同的,可以分别为它们指定列名:

df1 = pd.DataFrame({

"lkey": list('bbacaab'),

"data1": range(7)

})

df2 = pd.DataFrame({

"rkey": list('abd'),

"data2": range(3)

})

log("df1", df1)

log("df2", df2)

log("pd.merge(df1, df2, left_on='lkey', right_on='rkey')", pd.merge(df1, df2, left_on='lkey', right_on='rkey'))

===================================== df1 ====================================== lkey data1 0 b 0 1 b 1 2 a 2 3 c 3 4 a 4 5 a 5 6 b 6 ===================================== df2 ====================================== rkey data2 0 a 0 1 b 1 2 d 2 ============= pd.merge(df1, df2, left_on='lkey', right_on='rkey') ============== lkey data1 rkey data2 0 b 0 b 1 1 b 1 b 1 2 b 6 b 1 3 a 2 a 0 4 a 4 a 0 5 a 5 a 0

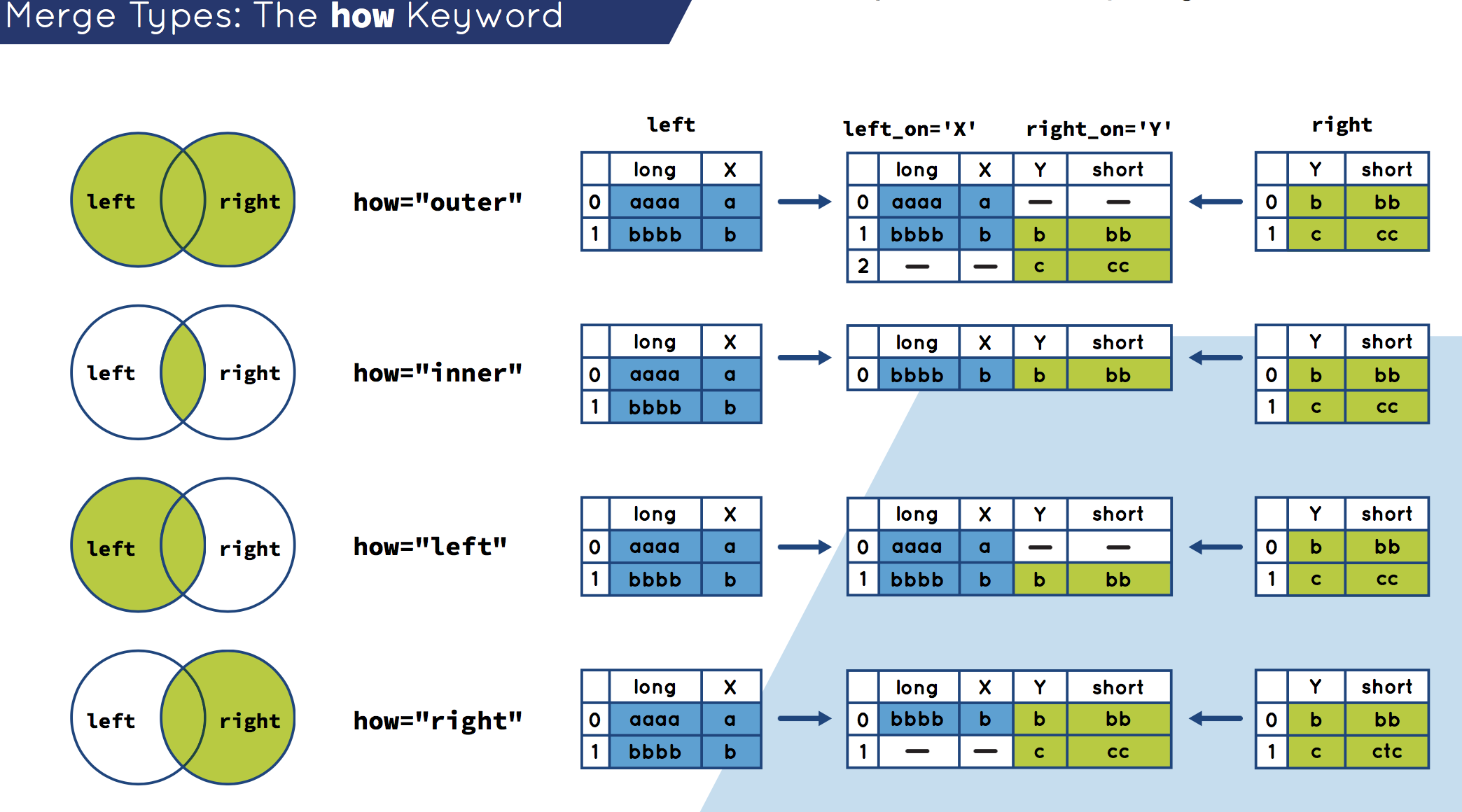

4.2.1.2 合并类型(inner,outer,left,right)

默认情况下, merge 做的是内连接。

多对多的连接是行的笛卡尔积 :

df1 = pd.DataFrame({

"key": list('bbacab'),

"data1": range(6)

})

df1.index = ['one', 'two', 'three', 'four', 'five', 'six']

df2 = pd.DataFrame({

"key": list('ababd'),

"data2": range(5)

})

log("df1", df1)

log("df2", df2)

log("pd.merge(df1, df2, on='key', how='left')", pd.merge(df1, df2, on='key', how='left'))

===================================== df1 ======================================

key data1

one b 0

two b 1

three a 2

four c 3

five a 4

six b 5

===================================== df2 ======================================

key data2

0 a 0

1 b 1

2 a 2

3 b 3

4 d 4

=================== pd.merge(df1, df2, on='key', how='left') ===================

key data1 data2

0 b 0 1.0

1 b 0 3.0

2 b 1 1.0

3 b 1 3.0

4 a 2 0.0

5 a 2 2.0

6 c 3 NaN

7 a 4 0.0

8 a 4 2.0

9 b 5 1.0

10 b 5 3.0

如上例所示,进行列-列连接时,索引对象会被丢弃。

4.2.1.3 按索引合并

left1 = pd.DataFrame({

"key": list('abaabc'),

"value": range(6)

})

right1 = pd.DataFrame({

"group_val": [3.5, 7]

}, index=['a', 'b'])

log("left1", left1)

log("right1", right1)

log("pd.merge(left1, right1, left_on='key', right_index=True)", pd.merge(left1, right1, left_on='key', right_index=True))

==================================== left1 ===================================== key value 0 a 0 1 b 1 2 a 2 3 a 3 4 b 4 5 c 5 ==================================== right1 ==================================== group_val a 3.5 b 7.0 =========== pd.merge(left1, right1, left_on='key', right_index=True) =========== key value group_val 0 a 0 3.5 2 a 2 3.5 3 a 3 3.5 1 b 1 7.0 4 b 4 7.0

多层索引的情况下,在索引上的连接是一个隐式的多键合并, 必须以列表的方式指明合并所需的多个列:

lefth = pd.DataFrame(

{

"key1": ['Ohio', 'Ohio', 'Ohio', 'Nevada', 'Nevada'],

"key2": [2000, 2001, 2002, 2001, 2002],

"data": np.arange(5)

}

)

righth = pd.DataFrame(np.arange(12).reshape((6, 2)),

index=[

['Nevada', 'Nevada', 'Ohio', 'Ohio', 'Ohio', 'Ohio'],

[2001, 2000, 2000, 2000, 2001, 2002]

],

columns=['event1', 'event2']

)

log("lefth", lefth)

log("righth", righth)

log("pd.merge(lefth, righth, left_on=['key1', 'key2'], right_index=True)", pd.merge(lefth, righth, left_on=['key1', 'key2'], right_index=True))

==================================== lefth =====================================

key1 key2 data

0 Ohio 2000 0

1 Ohio 2001 1

2 Ohio 2002 2

3 Nevada 2001 3

4 Nevada 2002 4

==================================== righth ====================================

event1 event2

Nevada 2001 0 1

2000 2 3

Ohio 2000 4 5

2000 6 7

2001 8 9

2002 10 11

===== pd.merge(lefth, righth, left_on=['key1', 'key2'], right_index=True) ======

key1 key2 data event1 event2

0 Ohio 2000 0 4 5

0 Ohio 2000 0 6 7

1 Ohio 2001 1 8 9

2 Ohio 2002 2 10 11

3 Nevada 2001 3 0 1

两边都使用索引合并:

left2 = pd.DataFrame([[1, 2], [3, 4], [5, 6]],

index=list('ace'),

columns=['Ohio', 'Nevada']

)

right2 = pd.DataFrame([[7, 8], [9, 10], [11, 12], [13, 14]],

index=list('bcde'),

columns=['Missouri', 'Alabama']

)

log("left2", left2)

log("right2", right2)

log("pd.merge(left2, right2, how='outer', left_index=True, right_index=True)", pd.merge(left2, right2, how='outer', left_index=True, right_index=True))

==================================== left2 ===================================== Ohio Nevada a 1 2 c 3 4 e 5 6 ==================================== right2 ==================================== Missouri Alabama b 7 8 c 9 10 d 11 12 e 13 14 === pd.merge(left2, right2, how='outer', left_index=True, right_index=True) ==== Ohio Nevada Missouri Alabama a 1.0 2.0 NaN NaN b NaN NaN 7.0 8.0 c 3.0 4.0 9.0 10.0 d NaN NaN 11.0 12.0 e 5.0 6.0 13.0 14.0

4.2.1.4 join

DataFrame 有一个方便的 join 方法,底层仍然是使用 merge ,用于 按照索引合并 。(_默认是左连接_)

之前的例子中,可以这样写:

left2 = pd.DataFrame([[1, 2], [3, 4], [5, 6]],

index=list('ace'),

columns=['Ohio', 'Nevada']

)

right2 = pd.DataFrame([[7, 8], [9, 10], [11, 12], [13, 14]],

index=list('bcde'),

columns=['Missouri', 'Alabama']

)

log("left2", left2)

log("right2", right2)

log("left2.join(right2, how='outer')", left2.join(right2, how='outer'))

==================================== left2 ===================================== Ohio Nevada a 1 2 c 3 4 e 5 6 ==================================== right2 ==================================== Missouri Alabama b 7 8 c 9 10 d 11 12 e 13 14 ======================= left2.join(right2, how='outer') ======================== Ohio Nevada Missouri Alabama a 1.0 2.0 NaN NaN b NaN NaN 7.0 8.0 c 3.0 4.0 9.0 10.0 d NaN NaN 11.0 12.0 e 5.0 6.0 13.0 14.0

join 也支持使用连接键:

left1 = pd.DataFrame({

"key": list('abaabc'),

"value": range(6)

})

right1 = pd.DataFrame({

"group_val": [3.5, 7]

}, index=['a', 'b'])

log("left1", left1)

log("right1", right1)

log("left1.join(right1, on='key')", left1.join(right1, on='key'))

==================================== left1 ===================================== key value 0 a 0 1 b 1 2 a 2 3 a 3 4 b 4 5 c 5 ==================================== right1 ==================================== group_val a 3.5 b 7.0 ========================= left1.join(right1, on='key') ========================= key value group_val 0 a 0 3.5 1 b 1 7.0 2 a 2 3.5 3 a 3 3.5 4 b 4 7.0 5 c 5 NaN

another = pd.DataFrame(

[[7, 8], [9, 10], [11, 12], [16, 17]],

index=list('acef'),

columns=['New York', 'Oregon']

)

left2 = pd.DataFrame([[1, 2], [3, 4], [5, 6]],

index=list('ace'),

columns=['Ohio', 'Nevada']

)

right2 = pd.DataFrame([[7, 8], [9, 10], [11, 12], [13, 14]],

index=list('bcde'),

columns=['Missouri', 'Alabama']

)

log("another", another)

log("left2", left2)

log("right2", right2)

log("left2.join([right2, another])", left2.join([right2, another]))

=================================== another ==================================== New York Oregon a 7 8 c 9 10 e 11 12 f 16 17 ==================================== left2 ===================================== Ohio Nevada a 1 2 c 3 4 e 5 6 ==================================== right2 ==================================== Missouri Alabama b 7 8 c 9 10 d 11 12 e 13 14 ======================== left2.join([right2, another]) ========================= Ohio Nevada Missouri Alabama New York Oregon a 1 2 NaN NaN 7 8 c 3 4 9.0 10.0 9 10 e 5 6 13.0 14.0 11 12

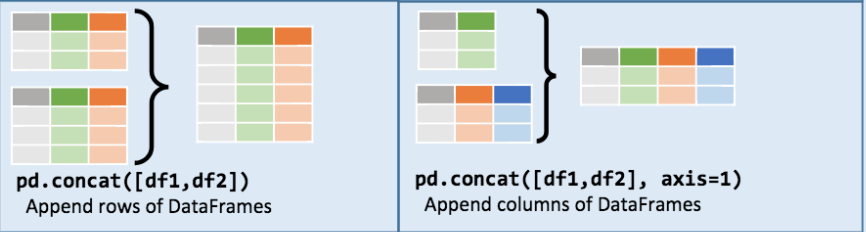

4.2.2 concat

concat 方法本质其实就是按索引合并 ,默认情况下 concat 方法是沿着 axis = 0 的轴向生效的,

生成另一个 Series ;如传递 axis = 1 , 返回的结果则是 DataFrame 。

s1 = pd.Series([0, 1], index=['a', 'b'])

s2 = pd.Series([2, 3, 4], index=['c', 'd', 'e'])

s3 = pd.Series([5, 6], index=['f', 'g'])

log("s1", s1)

log("s2", s2)

log("s3", s3)

log("pd.concat([s1, s2, s3])", pd.concat([s1, s2, s3]))

log("pd.concat([s1, s2, s3], axis=1)", pd.concat([s1, s2, s3], axis=1))

====================================== s1 ======================================

a 0

b 1

dtype: int64

====================================== s2 ======================================

c 2

d 3

e 4

dtype: int64

====================================== s3 ======================================

f 5

g 6

dtype: int64

=========================== pd.concat([s1, s2, s3]) ============================

a 0

b 1

c 2

d 3

e 4

f 5

g 6

dtype: int64

======================= pd.concat([s1, s2, s3], axis=1) ========================

0 1 2

a 0.0 NaN NaN

b 1.0 NaN NaN

c NaN 2.0 NaN

d NaN 3.0 NaN

e NaN 4.0 NaN

f NaN NaN 5.0

g NaN NaN 6.0

concat 默认使用 外连接 :

s4 = pd.concat([s1, s3])

log("s4", s4)

log("pd.concat([s1, s4], axis=1)", pd.concat([s1, s4], axis=1))

log("pd.concat([s1, s4], axis=1, join='inner')", pd.concat([s1, s4], axis=1, join='inner'))

====================================== s4 ======================================

a 0

b 1

f 5

g 6

dtype: int64

========================= pd.concat([s1, s4], axis=1) ==========================

0 1

a 0.0 0

b 1.0 1

f NaN 5

g NaN 6

================== pd.concat([s1, s4], axis=1, join='inner') ===================

0 1

a 0 0

b 1 1

4.2.2.1 join_axes

可以使用 join_axes 来指定其他轴向的轴:

log("pd.concat([s1, s4], axis=1, join_axes=[['a', 'c', 'b', 'e']])", pd.concat([s1, s4], axis=1, join_axes=[['a', 'c', 'b', 'e']]))

======== pd.concat([s1, s4], axis=1, join_axes=[['a', 'c', 'b', 'e']]) =========

0 1

a 0.0 0.0

c NaN NaN

b 1.0 1.0

e NaN NaN

4.2.2.2 区分拼接在一起的各部分

result = pd.concat([s1, s2, s3], keys=['one', 'two', 'three'])

log("result", result)

log("result.unstack()", result.unstack())

==================================== result ====================================

one a 0

b 1

two c 2

d 3

e 4

three f 5

g 6

dtype: int64

=============================== result.unstack() ===============================

a b c d e f g

one 0.0 1.0 NaN NaN NaN NaN NaN

two NaN NaN 2.0 3.0 4.0 NaN NaN

three NaN NaN NaN NaN NaN 5.0 6.0

log("pd.concat([s1, s2, s3], axis=1, keys=['one', 'two', 'three'])", pd.concat([s1, s2, s3], axis=1, keys=['one', 'two', 'three']))

======== pd.concat([s1, s2, s3], axis=1, keys=['one', 'two', 'three']) ========= one two three a 0.0 NaN NaN b 1.0 NaN NaN c NaN 2.0 NaN d NaN 3.0 NaN e NaN 4.0 NaN f NaN NaN 5.0 g NaN NaN 6.0

应用于 DataFrame 对象:

df1 = pd.DataFrame(np.arange(6).reshape((3, 2)),

index=list('abc'),

columns=['one', 'two']

)

df2 = pd.DataFrame(5 + np.arange(4).reshape((2, 2)),

index=list('ac'),

columns=['three', 'four']

)

log("df1", df1)

log("df2", df2)

log("pd.concat([df1, df2])", pd.concat([df1, df2]))

log("pd.concat([df1, df2], keys=['level1', 'level2'])", pd.concat([df1, df2], keys=['level1', 'level2']))

log("pd.concat([df1, df2], axis=1)", pd.concat([df1, df2], axis=1))

log("pd.concat([df1, df2], axis=1, keys=['level1', 'level2'])", pd.concat([df1, df2], axis=1, keys=['level1', 'level2']))

===================================== df1 ======================================

one two

a 0 1

b 2 3

c 4 5

===================================== df2 ======================================

three four

a 5 6

c 7 8

============================ pd.concat([df1, df2]) =============================

four one three two

a NaN 0.0 NaN 1.0

b NaN 2.0 NaN 3.0

c NaN 4.0 NaN 5.0

a 6.0 NaN 5.0 NaN

c 8.0 NaN 7.0 NaN

=============== pd.concat([df1, df2], keys=['level1', 'level2']) ===============

four one three two

level1 a NaN 0.0 NaN 1.0

b NaN 2.0 NaN 3.0

c NaN 4.0 NaN 5.0

level2 a 6.0 NaN 5.0 NaN

c 8.0 NaN 7.0 NaN

======================== pd.concat([df1, df2], axis=1) =========================

one two three four

a 0 1 5.0 6.0

b 2 3 NaN NaN

c 4 5 7.0 8.0

=========== pd.concat([df1, df2], axis=1, keys=['level1', 'level2']) ===========

level1 level2

one two three four

a 0 1 5.0 6.0

b 2 3 NaN NaN

c 4 5 7.0 8.0

4.2.2.3 ignore_index

df1 = pd.DataFrame(np.random.randn(3, 4), columns=list('abcd'))

df2 = pd.DataFrame(np.random.randn(2, 3), columns=list('bda'))

log("df1", df1)

log("df2", df2)

log("pd.concat([df1, df2])", pd.concat([df1, df2]))

log("pd.concat([df1, df2], ignore_index=True)", pd.concat([df1, df2], ignore_index=True))

===================================== df1 ======================================

a b c d

0 0.308352 -0.010026 1.317371 -0.614389

1 0.363210 1.110404 -0.240416 -0.455806

2 0.926422 -0.429935 0.196401 -1.392373

===================================== df2 ======================================

b d a

0 -1.277329 -0.447157 0.619382

1 -0.210343 -0.526383 -1.627948

============================ pd.concat([df1, df2]) =============================

a b c d

0 0.308352 -0.010026 1.317371 -0.614389

1 0.363210 1.110404 -0.240416 -0.455806